I Built the Same Automation in Make.com and n8n in One Day - Here's What I Learned

How a side-by-side comparison of two popular automation platforms revealed their true strengths and weaknesses.

TL;DR

I spent one day building identical RSS content curation workflows in both Make.com and n8n to settle the platform debate. The result? Both work, but they serve completely different audiences. Make.com excels at simplicity and speed for business users, while n8n offers transparency and flexibility for technical teams. The choice depends on your team composition and complexity needs, not platform superiority.

Key Finding: The platforms are so different in approach that "which is better" is the wrong question. The right question is "which fits your team and use case."

The Business Problem

Content curation is a time sink. Every week, I was manually checking multiple AI/ML RSS sources, reading dozens of articles, and summarizing key insights for strategic planning. This process consumed 3-4 hours weekly and delivered inconsistent results depending on my available time and focus.

The challenge: automate content discovery and initial analysis while maintaining quality and strategic focus.

Requirements:

- Monitor multiple RSS sources daily (Papers With Code, arXiv, HuggingFace, AWS ML Blog, OpenAI Blog, etc.)

- Filter for recent content (24-48 hours)

- Generate strategic summaries using AI

- Output structured data for human review

- Handle varying content volumes and feed failures

- Maintain cost-effectiveness

Expected ROI: 3+ hours weekly time savings, improved consistency, and scalable content monitoring.

The Experiment Design

Rather than pick one platform and stick with it, I decided to build the same workflow in both Make.com and n8n to understand their real-world differences.

Timeline:

- Morning (4 hours): Complete Make.com implementation

- Afternoon (4 hours): Replicate functionality in n8n

- Testing: One source at a time, then full deployment

- Result: Both platforms operational with selective routing

This approach provided direct comparison data rather than theoretical platform analysis.

Make.com Implementation: The Business User's Dream

What Worked Brilliantly

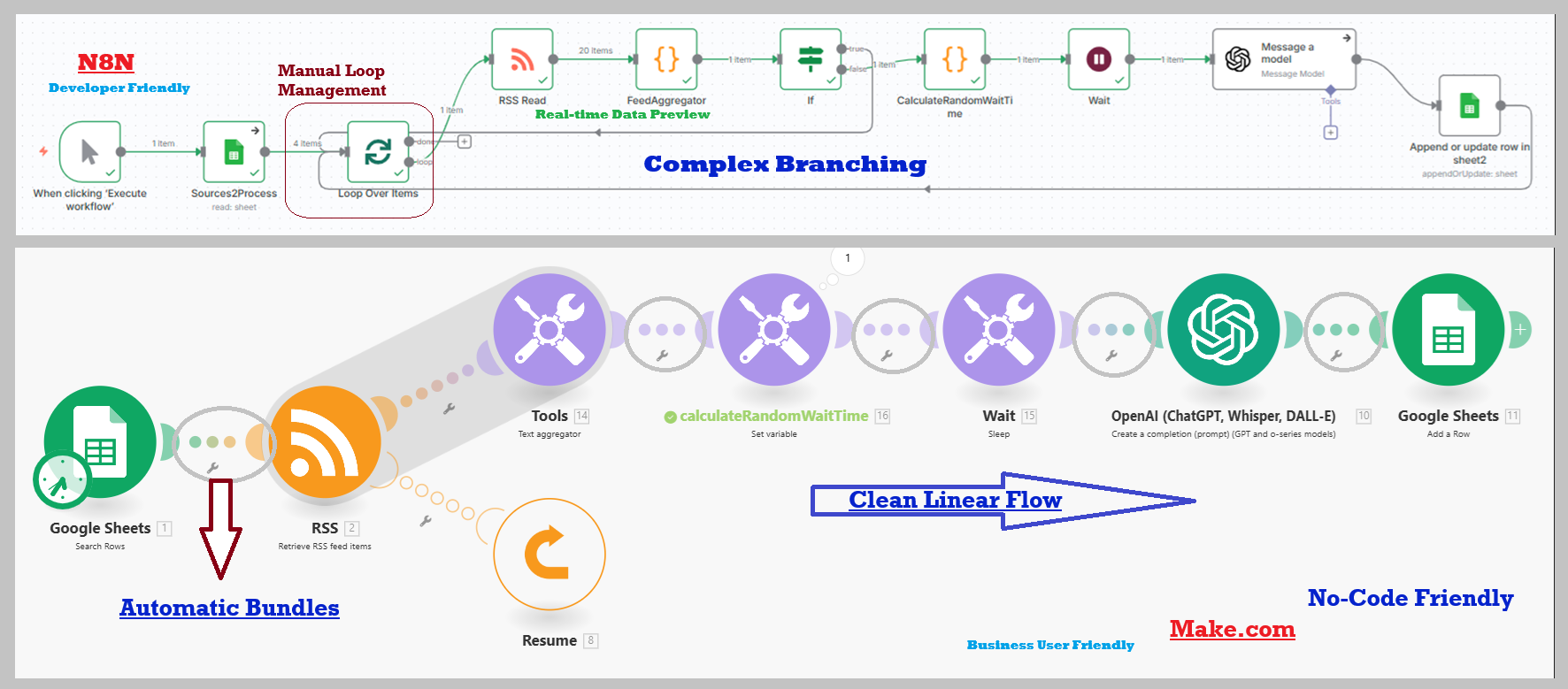

Visual Simplicity: The workflow reads like a flowchart. Google Sheets → RSS → Text Aggregator → OpenAI → Output. Non-technical team members immediately understood the logic.

Automatic Bundle Processing: Make.com's killer feature. When processing multiple RSS sources, each source automatically flows through the entire workflow independently. No manual loop configuration required.

No-Code Approach: Configuration over coding. Setting up the RSS aggregation required filling in templates and selecting options, not writing functions.

Speed to Deploy: Four hours from concept to production, including testing. The platform guided me through setup with clear options and examples.

Where Make.com Struggled

Debugging Opacity: When something broke, I had to run the entire workflow to see where it failed. Limited visibility into data transformations during development.

Syntax Learning Curve: The expression system ({{1.data}}, {{2.title}}) references data by module number, so you have to learn both the numbering scheme and the function syntax.

Reliability Quirks: Occasional unpredictable behavior that required workflow restarts or module reconfiguration.

Make.com Cost Analysis

- Starter Plan: $9/month (1,000 operations)

- Pro Plan: $16/month (10,000 operations)

- My Usage: ~270 operations/day = 8,100/month (Pro plan needed)

- Per-Operation Cost: ~$0.002
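
The arithmetic behind these numbers can be sketched in a few lines of Python. The plan prices and quotas are the ones quoted in the list above and may not match current Make.com pricing. The function names are made up for this illustration.

```python
# Plan figures as quoted in this article: (name, monthly price in USD, included operations).
PLANS = [
    ("Starter", 9.00, 1_000),
    ("Pro", 16.00, 10_000),
]

def monthly_operations(ops_per_day: int, days: int = 30) -> int:
    """Project daily operations onto a 30-day month."""
    return ops_per_day * days

def cheapest_plan(ops_per_month: int):
    """Return the cheapest plan whose quota covers the usage, or None."""
    for name, price, quota in sorted(PLANS, key=lambda p: p[1]):
        if ops_per_month <= quota:
            return name, price, quota
    return None

ops = monthly_operations(270)            # 270 * 30 = 8,100 operations
name, price, quota = cheapest_plan(ops)  # Starter is too small, so Pro
cost_per_included_op = price / quota     # 16 / 10,000 = $0.0016, roughly $0.002

print(ops, name, round(cost_per_included_op, 4))
```

Dividing the plan price by actual usage (16 / 8,100) gives about $0.00198, which is where the ~$0.002 figure lands either way.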

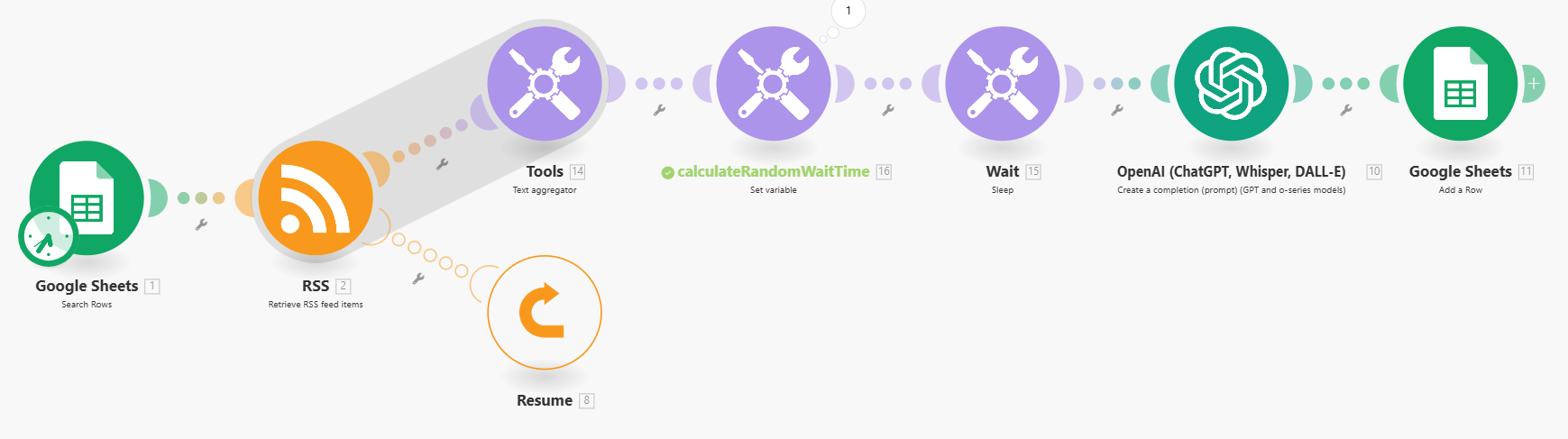

n8n Implementation: The Developer's Toolkit

What Impressed Me

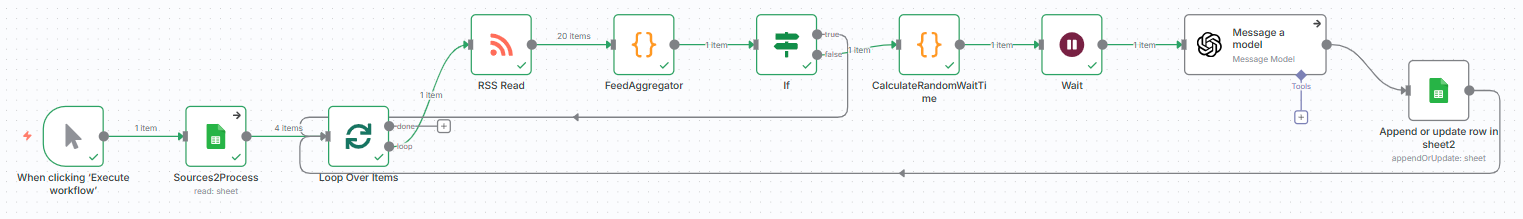

Real-Time Data Preview: The standout feature. Every node shows exactly what data flows through it. Debugging became trivial compared to Make.com's black box approach.

Transparency: I could see precisely how data transformed at each step. This visibility accelerated development and troubleshooting significantly.

Flexibility: JavaScript-based expressions provided unlimited customization. Complex data manipulation that would require multiple Make.com modules became single function nodes.

Reliability: More predictable behavior. When something worked in testing, it worked in production.

Where n8n Demanded More

Visual Complexity: The workflow looked intimidating with explicit loops, conditional branches, and complex data routing. Non-technical users would struggle.

Manual Loop Management: Required explicit "Loop Over Items" configuration to handle multiple RSS sources. What Make.com did automatically, n8n required manual setup.

Technical Knowledge: Creating the date filtering and content aggregation required JavaScript programming. Not accessible to business users.
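
To give a sense of the logic involved, here is a minimal sketch of date filtering and content aggregation. It is written in Python for illustration only; the n8n version was JavaScript. The item fields (`title`, `summary`, `published`) and the sample data are assumptions, not the actual workflow.

```python
from datetime import datetime, timedelta, timezone

def filter_recent(items, now, max_age_hours=48):
    """Keep items published within the last `max_age_hours` hours."""
    cutoff = now - timedelta(hours=max_age_hours)
    return [item for item in items if cutoff <= item["published"] <= now]

def aggregate(items):
    """Join titles and summaries into one text block for the AI step."""
    return "\n\n".join(f"{i['title']}\n{i['summary']}" for i in items)

# Made-up sample data: one item inside the window, one outside it.
now = datetime(2025, 1, 10, 12, 0, tzinfo=timezone.utc)
items = [
    {"title": "Fresh paper", "summary": "Posted yesterday.",
     "published": now - timedelta(hours=20)},
    {"title": "Old post", "summary": "Posted last week.",
     "published": now - timedelta(days=7)},
]

recent = filter_recent(items, now)
digest = aggregate(recent)
print(digest)
```

Passing `now` in as a parameter, rather than reading the clock inside the function, keeps the filter easy to test against fixed dates.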

n8n Cost Analysis

- Cloud Plan: $20/month (2,500 executions)

- Self-Hosted: Free (requires server management)

- My Setup: Self-hosted on AWS Lightsail ($10/month server cost)

- Effective Cost: $10/month + server management time

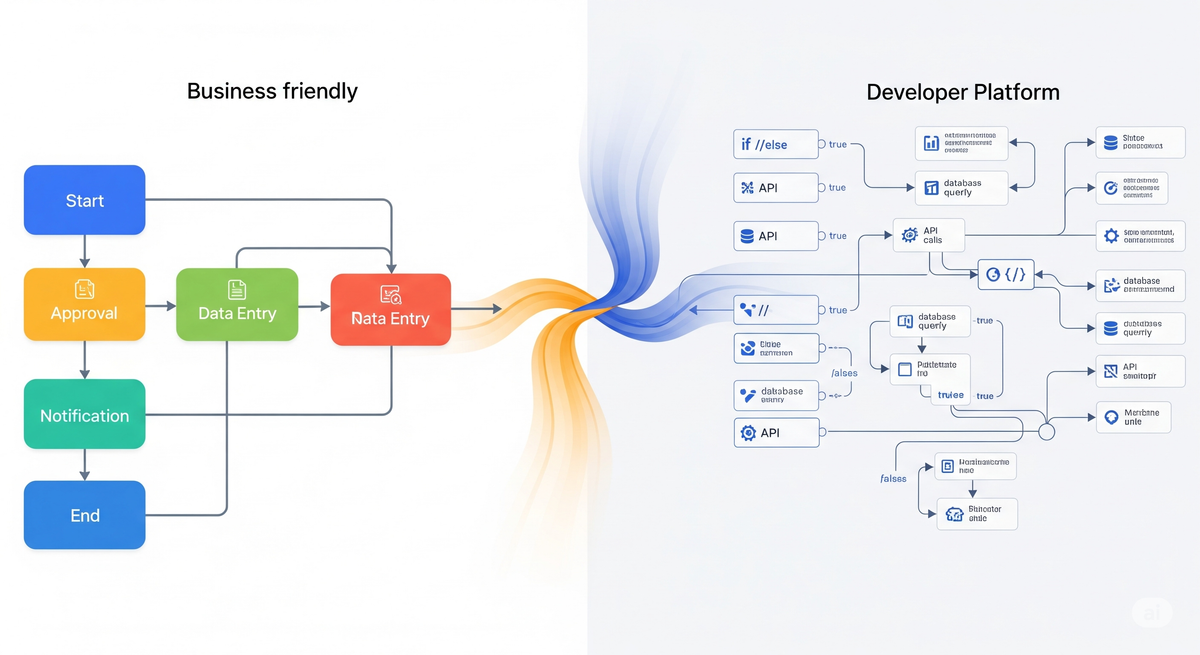

Side-by-Side Comparison: The Real Differences

Data Processing Philosophy

Make.com: Automatic bundle processing. Multiple data items flow through the workflow independently without manual configuration.

n8n: Manual loop management. Requires explicit iteration setup but provides complete control over data flow.

Development Experience

Make.com: Configuration-focused with limited development visibility. Faster initial setup but harder to debug.

n8n: Code-friendly with extensive debugging capabilities. Slower initial setup but faster troubleshooting.

Error Handling

Make.com: Built-in retry mechanisms and automatic error routing. Less control but more automated recovery.

n8n: Manual error handling using conditional nodes. More setup required but complete control over error scenarios.
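
Conceptually, the retry behavior that Make.com provides out of the box and that n8n leaves to you looks something like the sketch below. This is plain Python, not either platform's API. The `fetch` callable, the attempt count, and the delays are all assumptions chosen for the example.

```python
import time

def fetch_with_retry(fetch, url, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), retrying with exponential backoff.

    Returns None if every attempt fails, so one broken feed
    does not stop the rest of the run.
    """
    for attempt in range(attempts):
        try:
            return fetch(url)
        except Exception:
            if attempt < attempts - 1:
                sleep(base_delay * 2 ** attempt)  # 1s, 2s, 4s, ...
    return None

# Hypothetical fetcher for the demo: fails twice, then succeeds.
calls = {"n": 0}

def flaky_fetch(url):
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("feed unavailable")
    return f"<rss from {url}>"

delays = []  # record the waits instead of actually sleeping
result = fetch_with_retry(flaky_fetch, "https://example.com/feed.xml",
                          sleep=delays.append)
print(result, delays)
```

Returning `None` on total failure is one design choice among several. Routing the failure to a separate alerting branch would be closer to what an error route does on either platform.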

Cost-Benefit Analysis

Total Cost of Ownership (Monthly)

Make.com:

- Platform cost: $16 (10,000 operations limit)

- Development time: 4 hours initial

- Maintenance: 1 hour/month

- Monthly equivalent: ~$25

- Cost scaling: Linear with operation count - heavy usage can become expensive

n8n (Self-Hosted):

- Platform cost: $10 (AWS Lightsail server)

- Development time: 4 hours initial

- Maintenance: 2 hours/month (server + workflow)

- Monthly equivalent: ~$30

- Cost scaling: Fixed regardless of usage volume, up to the capacity of the server

The Self-Hosted Advantage

This is where n8n's economics become compelling. With a self-hosted instance, you can run unlimited workflows without per-operation costs. Want to process dozens of RSS sources instead of the initial set? No additional platform fees. Need to run workflows every hour instead of daily? Same fixed cost.

Make.com's operation-based pricing means costs scale directly with usage. Testing new workflows, running multiple variations, or scaling up operations all increase monthly bills.
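
A rough way to picture the two cost curves, using only the platform figures from this article: about $0.002 per Make.com operation and a flat $10/month for the Lightsail server. Real Make.com pricing is tiered rather than strictly linear, and the sketch ignores maintenance time, so treat it as an approximation.

```python
MAKE_COST_PER_OP = 0.002  # approximate per-operation figure quoted earlier
N8N_SERVER_COST = 10.00   # monthly Lightsail cost quoted earlier

def make_cost(ops_per_month: int) -> float:
    """Simplified linear model of operation-based pricing."""
    return ops_per_month * MAKE_COST_PER_OP

def n8n_cost(ops_per_month: int) -> float:
    """Self-hosted cost stays flat as long as the server keeps up."""
    return N8N_SERVER_COST

for ops in (1_000, 8_100, 50_000):
    print(f"{ops:>6} ops: Make.com ~${make_cost(ops):.2f}, "
          f"n8n self-hosted ${n8n_cost(ops):.2f}")

# Volume at which the linear model crosses the flat server cost.
break_even_ops = N8N_SERVER_COST / MAKE_COST_PER_OP
print(f"break-even at about {break_even_ops:.0f} operations/month")
```

Under these assumptions the platform fees cross at around 5,000 operations per month. Adding the extra hour of monthly maintenance for the self-hosted setup would push that point higher.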

Real-World Self-Hosting Experience: I started with 13 RSS sources but scaled back to a smaller set because several individual feeds were unreliable. With n8n's self-hosted model, this experimentation and optimization cost nothing extra. Under Make.com's pricing, each test iteration would have consumed paid operations.

Self-Hosting Implementation: For teams considering the self-hosted route, I've documented the complete server setup process in my n8n Multi-User Server Setup Guide. The implementation creates isolated n8n instances for multiple users on a single AWS Lightsail server using Docker containers with CloudPanel for management, providing each user with their own secure subdomain.

ROI Calculation

- Time saved: 12+ hours/month

- Value at $50/hour: $600/month

- Platform cost: $25-30/month

- Net ROI: 2,000%+ (both platforms)
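
The article does not state its ROI formula, so the sketch below computes two common definitions from the figures above. The 2,000%+ figure matches value divided by cost. The stricter net definition (gain minus cost, divided by cost) comes out slightly lower at the $30 end.

```python
HOURS_SAVED_PER_MONTH = 12
HOURLY_RATE = 50.0

def gross_return_percent(value: float, cost: float) -> float:
    """Value delivered as a percentage of cost."""
    return value / cost * 100

def net_roi_percent(value: float, cost: float) -> float:
    """Net gain (value minus cost) as a percentage of cost."""
    return (value - cost) / cost * 100

value = HOURS_SAVED_PER_MONTH * HOURLY_RATE  # 12 * 50 = 600.0

for label, cost in (("Make.com", 25.0), ("n8n self-hosted", 30.0)):
    print(f"{label}: gross {gross_return_percent(value, cost):.0f}%, "
          f"net {net_roi_percent(value, cost):.0f}%")
```

Whichever definition you prefer, the time saved dwarfs the platform cost on both platforms.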

While both platforms deliver strong ROI, n8n's fixed-cost model provides better economics for scaling and experimentation. For organizations in healthcare, financial services, or other heavily regulated industries, n8n's self-hosted deployment also offers data sovereignty advantages. Workflow data stays on your own infrastructure unless a step explicitly sends it to an external service, which makes it easier to meet regulations like HIPAA, PCI-DSS, or industry-specific data governance requirements that may prohibit cloud-based processing of confidential information. Self-hosting alone does not make a workflow compliant, but it removes one major obstacle.

Decision Framework: Which Platform Should You Choose?

Choose Make.com If:

- Team Profile: Business users, marketers, operations staff

- Workflow Complexity: Linear processes with standard data transformations

- Development Resources: Limited technical expertise available

- Priority: Speed to deployment and ease of maintenance

- Budget: Can absorb higher per-operation costs for simplicity

Choose n8n If:

- Team Profile: Developers, technical operations, engineering teams

- Workflow Complexity: Complex logic, custom data transformations, extensive error handling

- Development Resources: JavaScript knowledge available

- Priority: Transparency, debugging capabilities, cost optimization

- Budget: Can invest technical time for lower operational costs

- Data Sovereignty: Need to keep sensitive data within your own infrastructure

- Regulatory Compliance: Operating in healthcare, financial services, or other heavily regulated industries

- Security Requirements: Must comply with HIPAA, PCI-DSS, SOX, or similar data governance standards

Red Flags for Each Platform

Avoid Make.com If:

- You need extensive debugging visibility

- Workflow logic is highly complex

- Budget is extremely tight

- You require custom data transformations

Avoid n8n If:

- No technical expertise available

- Need immediate deployment

- Team composition is primarily business users

- Maintenance resources are limited

Implementation Lessons Learned

Technical Insights

- Context Management: n8n requires careful attention to data scoping when accessing information from different nodes within loops.

- Token Limitations: Large content aggregations hit AI model limits. GPT-4o's 128K context window was necessary for processing multiple RSS feeds.

- Date Filtering Reality: RSS feeds rarely have same-day content. Flexible date range handling (24-48 hours) proved essential.

- Rate Limiting Strategy: Both platforms support API rate limiting, but n8n's custom implementation provided better control.

- Testing Methodology: Testing one source at a time before full deployment prevented complex debugging scenarios.
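
The token limitation point is easy to guard against before calling the model. A minimal sketch follows, assuming a rough heuristic of four characters per token. This is a rule of thumb for English text, not an exact tokenizer, and the function names are made up for the example.

```python
CHARS_PER_TOKEN = 4  # rough heuristic, not an exact tokenizer

def estimate_tokens(text: str) -> int:
    """Cheap upper-ish estimate of how many tokens a text will use."""
    return len(text) // CHARS_PER_TOKEN + 1

def fit_to_budget(articles, max_tokens):
    """Add articles in order until the estimated budget would be exceeded."""
    kept, used = [], 0
    for text in articles:
        cost = estimate_tokens(text)
        if used + cost > max_tokens:
            break
        kept.append(text)
        used += cost
    return kept, used

# Three placeholder articles of 400 characters each (about 101 tokens apiece).
articles = ["a" * 400, "b" * 400, "c" * 400]
kept, used = fit_to_budget(articles, max_tokens=250)
print(len(kept), used)
```

In practice the budget would sit well below the model's context window to leave room for the prompt and the generated summary.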

Business Insights

- Platform Selection Timing: Choose the platform based on current team capabilities, not aspirational technical skills.

- Maintenance Planning: Factor ongoing maintenance effort into platform decisions. Make.com requires less technical maintenance but costs more operationally.

- Scaling Considerations: n8n scales better with complexity growth; Make.com scales better with team size growth.

- Regulatory Assessment: Evaluate data governance requirements early in platform selection. Organizations in regulated industries may have limited viable options due to compliance constraints.

- Data Sovereignty Planning: Consider where sensitive data will be processed and stored. Self-hosted solutions provide complete control but require infrastructure management capabilities.

- Compliance Cost Analysis: Factor regulatory compliance costs into total cost of ownership. Cloud platform limitations may force expensive architectural compromises or mandate self-hosted deployments.

Real-World Results

Both workflows now run simultaneously, processing RSS sources based on a Google Sheets control system. This dual-platform approach provides:

- Platform Comparison Data: Real operational metrics on reliability, cost, and maintenance

- Risk Mitigation: Redundancy for critical content sources

- Team Flexibility: Business users can use Make.com while technical users optimize n8n workflows

- Migration Path: Gradual transition capability as team composition evolves
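
The control-sheet idea can be sketched as a small routing function. The column names (`source`, `url`, `platform`, `enabled`) and the sample rows are hypothetical, not the layout of the actual sheet. A `both` value stands in for the redundancy case.

```python
# Hypothetical control-sheet rows; URLs are placeholders.
rows = [
    {"source": "arXiv", "url": "https://example.com/arxiv.xml",
     "platform": "n8n", "enabled": True},
    {"source": "HuggingFace", "url": "https://example.com/hf.xml",
     "platform": "make", "enabled": True},
    {"source": "OpenAI Blog", "url": "https://example.com/openai.xml",
     "platform": "both", "enabled": True},
    {"source": "AWS ML Blog", "url": "https://example.com/aws.xml",
     "platform": "make", "enabled": False},
]

def route(rows):
    """Group enabled sources by the platform that should process them."""
    routed = {"make": [], "n8n": []}
    for row in rows:
        if not row["enabled"]:
            continue  # disabled feeds are skipped on both platforms
        if row["platform"] == "both":
            targets = ("make", "n8n")
        else:
            targets = (row["platform"],)
        for target in targets:
            routed[target].append(row["source"])
    return routed

routed = route(rows)
print(routed)
```

Keeping the routing in a sheet means a source can be moved between platforms, or switched off entirely, without editing either workflow.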

Current Status: 30 days operational with 99%+ uptime on both platforms.

The Verdict: It's Not About Better, It's About Fit

The automation platform debate misses the point. Both Make.com and n8n successfully implement complex workflows, but they optimize for different user profiles and use cases.

Make.com succeeds by abstracting complexity away from business users. The platform trades some flexibility and cost efficiency for significant reductions in technical barriers and maintenance overhead.

n8n succeeds by providing complete transparency and control to technical users. The platform trades some accessibility and setup speed for debugging capabilities, operational cost efficiency, and data sovereignty. For regulated industries, n8n's self-hosted model delivers critical compliance advantages that cloud-based alternatives cannot match.

The real insight: platform choice should align with team composition and workflow complexity, not platform features or pricing alone.

What's Next?

This comparative implementation revealed that automation platform selection requires evaluating multiple dimensions beyond just features and pricing. Your next steps depend on your organizational context:

For Regulated Industries:

- Assess compliance requirements first - Determine if data sovereignty is mandatory before evaluating platforms

- Evaluate self-hosting capabilities - Consider whether your team can manage infrastructure alongside automation workflows

- Budget for compliance costs - Factor regulatory requirements into total cost of ownership calculations

For Technical Teams:

- Start with n8n self-hosted - Leverage the unlimited experimentation and cost advantages

- Implement the multi-user server setup - Scale across your team using the documented architecture

- Build compliance-ready foundations - Even if not currently required, establish data sovereignty practices

For Business-First Organizations:

- Begin with Make.com - Rapid deployment for immediate productivity gains

- Plan migration paths - Consider how platform choice might evolve with team capabilities

- Evaluate hybrid approaches - Use both platforms for different workflow types based on complexity and sensitivity

Universal Next Steps:

- Pilot with non-sensitive data - Test automation capabilities before processing regulated information

- Document platform decisions - Create clear criteria for future platform selections

- Plan for platform evolution - Consider how team growth and regulatory changes might affect platform choice

The successful automation strategy matches platform capabilities to organizational realities while planning for future requirements.

Key Takeaway: Stop asking "which platform is better" and start asking "which platform fits our team and use case today."

About This Analysis: This comparison represents real-world implementation experience, not theoretical evaluation. Your results may vary based on team composition, workflow complexity, and specific requirements.